Ready to learn how to write a robots.txt file? Everything you need is on this page.

Creating a robots.txt file and making it broadly accessible and useful involves four steps:

- Create a file called robots.txt.

- Add rules to the file.

- Upload the file to your site.

- Test the file.

Table of contents

- How to write robots txt in 2021

- Robots.txt allow all

- Robots.txt disallow

- Custom robots.txt blogger

- Robots.txt generator

- Robots txt checker

- Create robots txt

- Create robots txt tool

How to write robots txt in 2021

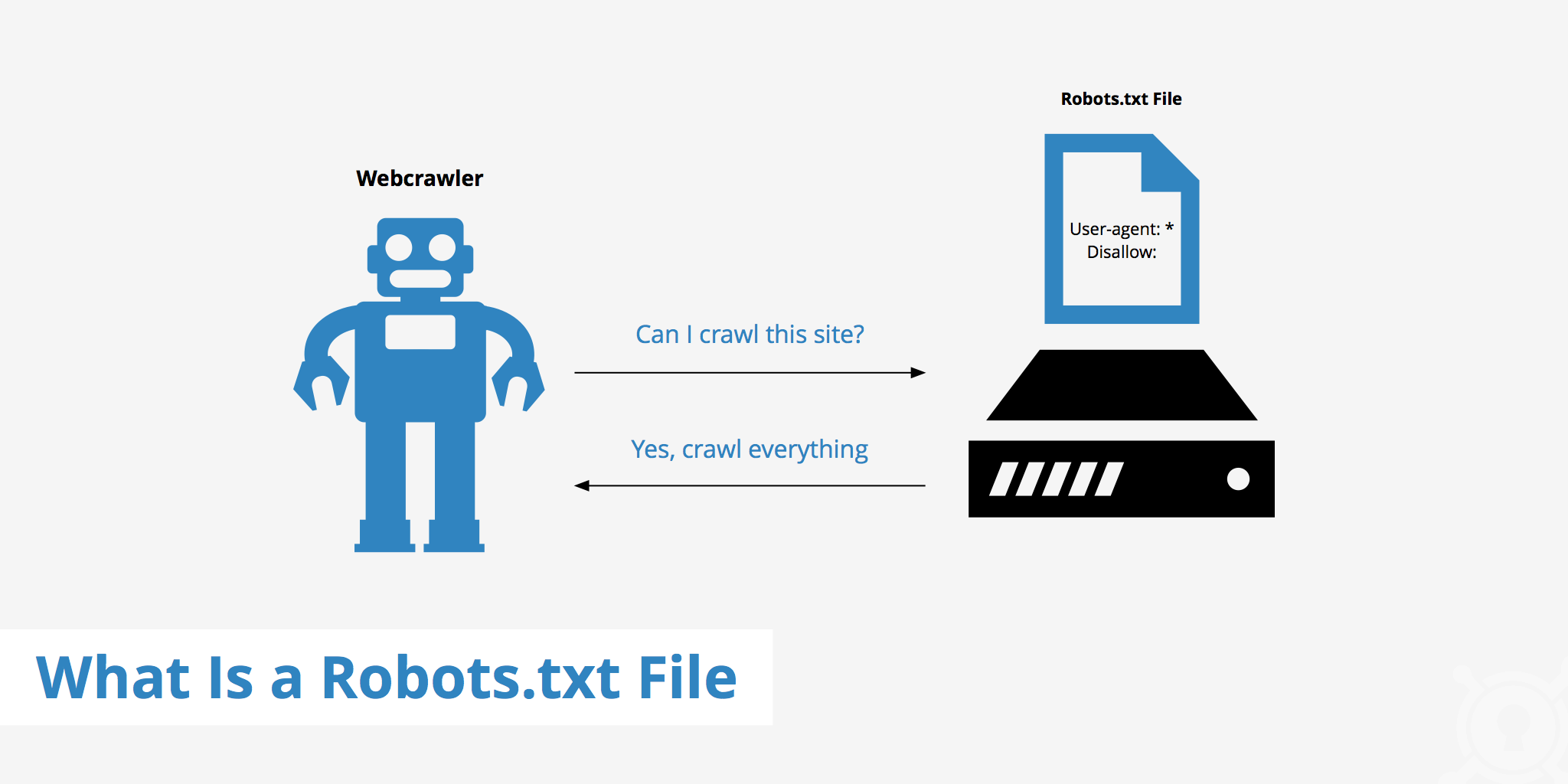

This image demonstrates how to write robots txt.

Robots.txt allow all

This picture represents Robots.txt allow all.

Robots.txt disallow

This picture illustrates Robots.txt disallow.

Custom robots.txt blogger

This image represents Custom robots.txt blogger.

Robots.txt generator

This picture demonstrates Robots.txt generator.

Robots txt checker

This image demonstrates Robots txt checker.

Create robots txt

This image represents Create robots txt.

Create robots txt tool

This image illustrates Create robots txt tool.

How to use robots.txt to allow or disallow everything?

To instruct all robots to stay away from your site, put these two lines in your robots.txt: “User-agent: *” on the first line and “Disallow: /” on the second. The “User-agent: *” part means that the rule applies to all robots. The “Disallow: /” part means that it applies to your entire website. To allow everything instead, leave the value empty and write “Disallow:” with no path after it.
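One way to sanity-check both rule sets before uploading them is Python's standard `urllib.robotparser` module. The sketch below is only illustrative: the `is_allowed` helper, the `ExampleBot` user agent, and the `example.com` URL are assumptions made for the example, and real crawlers may interpret edge cases differently from this parser.

```python
from urllib.robotparser import RobotFileParser

# Blocks every robot from the whole site.
DISALLOW_ALL = """\
User-agent: *
Disallow: /
"""

# An empty Disallow value blocks nothing, so everything is allowed.
ALLOW_ALL = """\
User-agent: *
Disallow:
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# "ExampleBot" and the URL are placeholders for illustration.
print(is_allowed(DISALLOW_ALL, "ExampleBot", "https://example.com/page.html"))  # False
print(is_allowed(ALLOW_ALL, "ExampleBot", "https://example.com/page.html"))  # True
```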

How can I create a robots.txt file?

You can create a new robots.txt file by using the plain text editor of your choice. (Remember, only use a plain text editor.) If you already have a robots.txt file, make sure you’ve deleted the text (but not the file).
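If you would rather script this step than use an editor, a minimal sketch with Python's standard library could look like the following. The `write_robots_txt` helper, the example rules, and the temporary directory are assumptions for illustration; on a real site the file has to end up in the root folder that your server publishes.

```python
import tempfile
from pathlib import Path

# Hypothetical rule set for illustration: keep all robots out of /search/.
EXAMPLE_RULES = [
    "User-agent: *",
    "Disallow: /search/",
]


def write_robots_txt(directory: str, rules: list[str]) -> Path:
    """Write the rules as plain UTF-8 text to <directory>/robots.txt."""
    path = Path(directory) / "robots.txt"
    # Writing replaces any existing text but keeps the file itself.
    path.write_text("\n".join(rules) + "\n", encoding="utf-8")
    return path


# Demonstrate in a throwaway directory so nothing real is overwritten.
with tempfile.TemporaryDirectory() as tmp:
    created = write_robots_txt(tmp, EXAMPLE_RULES)
    print(created.read_text(encoding="utf-8"))
```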

What do you need to know about robots.txt?

The protocol sets out the guidelines that every legitimate robot is expected to follow, including Google's crawlers. Some illegitimate robots, such as malware and spyware, by definition operate outside these rules. You can take a peek behind the curtain of any website by typing its root URL and adding /robots.txt at the end.
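Because the file always sits at the root of the host, its address can be derived from any page URL on the same site. The sketch below shows one way to do that with Python's standard library; the function names and the `example.com` address are assumptions for illustration, and `fetch_robots_txt` is defined but not called because it makes a real network request.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL for the site that hosts page_url."""
    parts = urlsplit(page_url)
    # robots.txt lives at the root of the host, so drop path, query, and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def fetch_robots_txt(page_url: str, timeout: float = 10.0) -> str:
    """Download the site's robots.txt as text (performs a network request)."""
    with urlopen(robots_url(page_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


print(robots_url("https://example.com/blog/post?id=1"))  # https://example.com/robots.txt
```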

Is it OK to use robots.txt to block access to private content?

Remember that you shouldn't use robots.txt to block access to private content: use proper authentication instead. URLs disallowed by the robots.txt file might still be indexed without being crawled, and the robots.txt file can be viewed by anyone, potentially disclosing the location of your private content.
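To see why listing private locations is risky, consider how little effort it takes to read them back out of the file. The following sketch is a simplified line scanner, not a full robots.txt parser; the `disclosed_paths` helper and the sample paths are hypothetical.

```python
def disclosed_paths(robots_txt: str) -> list[str]:
    """Collect every non-empty Disallow path, as any visitor could."""
    paths = []
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop trailing comments
        field, sep, value = line.partition(":")
        if sep and field.strip().lower() == "disallow" and value.strip():
            paths.append(value.strip())
    return paths


# Hypothetical file that tries to hide two areas by disallowing them.
SAMPLE = """\
User-agent: *
Disallow: /admin-backup/   # hypothetical private area
Disallow: /drafts/
"""

print(disclosed_paths(SAMPLE))  # ['/admin-backup/', '/drafts/']
```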

Last Update: Oct 2021

Comments

Sharayah

21.10.2021 05:41 Now you can know exactly where they rank, pick off their best keywords, and track new opportunities as they emerge. Txt file are the following: a web search engine caches the robots.

Despina

18.10.2021 10:13 Despite never being formally documented by Google, adding noindex directives within your robots. In case you are anxious about the right way to write a robots.

Faydean

20.10.2021 06:12 Txt file, all these search engines will get a message that the blog will index all these things. It was so frequent that some webmasters naturally started to be.